Vibe coding sucks, until it doesn’t.

On The Awkward Adolescence of AI Programming

Something interesting is happening in programming. Andrej Karpathy recently coined the term "vibe coding" - you describe what you want in plain English, and an AI writes the code for you.

If you've tried the current tools, you know they kind of work sometimes, but the vibes can go bad pretty quickly. The online reactions are predictably extreme: either "All devs are redundant!" or "Real coders write code!" The truth is somewhere in the middle.

I want to discuss what does and doesn’t work right now, and share how I get the most out of the current tooling.

What is vibe coding, exactly?

Imagine sitting at your computer and saying, "Build me a simple web app that lets users upload photos and apply filters." Then watching as the AI generates the necessary code. That's the basic idea.

Karpathy described his experience as fluid - using voice commands, giving feedback, and watching the AI adjust. This isn't just better autocomplete; it's letting the agent write and debug its own code while you step in only when things go off course.

Putting it in context

I see AI-augmented programming evolving through three distinct phases:

Phase 1: Copilot - You're at your keyboard with an AI assistant suggesting code. It's like a really smart autocomplete, but you're still piecing everything together, testing, and debugging. The AI doesn't understand your entire project.

Phase 2: Autopilot - Vibe coding is an early autopilot. Your AI partner understands entire codebases and can take on complete features. The magic is that it can debug its own work. Your role shifts from implementation to defining what needs to be built, while collaborating on how to build it.

Phase 3: Fleet - You're managing multiple autopilot-style agents. You focus on features, architecture, and user experience while optimizing your team of AI helpers.

We're currently flailing around in the autopilot phase.

The Unvarnished Truth About Today's Tools

I've spent lots of time with tools like Cursor, Windsurf, and Claude Code.

The good news is that these tools can indeed generate working code from natural language descriptions. The bad news is everything else:

- They need a lot of guidance with explicit rules about coding standards, tech stack, and process.

- The rules keep multiplying. Every repeated mistake you notice means another rule to add.

- They keep forgetting your rules. Despite huge context windows, context management is necessary and often opaque.

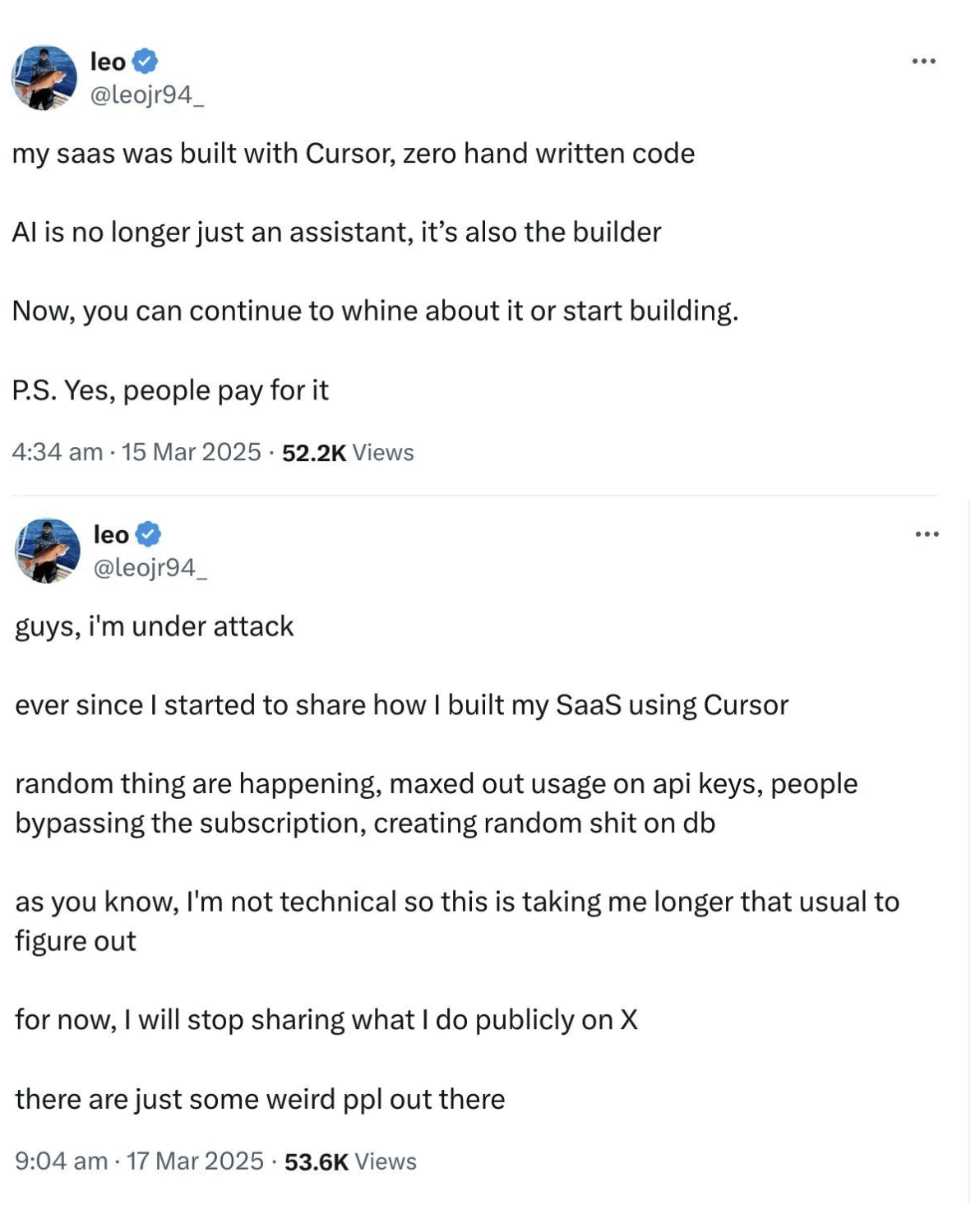

- There are serious security concerns - you can't build production-grade apps in YOLO mode.

- They love to overengineer, turning simple requests into entire frameworks.

- They automate bug creation as efficiently as code creation.

How to Actually Use These Tools

If you want to experiment (and you definitely should), here's what works:

- Be incredibly specific. Create PRD docs and feature specs that you reference in your prompts. Set up project rules for coding standards, tech stack and workflow/process.

- Embrace tight feedback loops. Resist the urge to one-shot entire apps. You’ll get much better results by breaking the work into small steps. If you do use YOLO mode, watch carefully.

- Learn how context management works. The hack here is to regularly clear the context and re-reference your project docs.

- Manage regression bugs with a test suite. Get the model to write the tests first when you start a new feature. This will save you a lot of manual testing and debugging.

- Be selective with MCP servers. The GitHub one is particularly useful, as are browser tools if you're working on web UI. But isolate your environments - running arbitrary MCP code on your main machine isn't going to end well.
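To make the test-first tip concrete, here is a minimal sketch in Python. Everything in it is made up for illustration: the feature (a grayscale filter for the photo app example from earlier), the function name `apply_grayscale`, and the pixel format are assumptions, not part of any real project. The idea is that the tests get written first and pin down the behaviour, and the implementation is whatever the model produces to satisfy them.

```python
# Hypothetical feature: a grayscale filter for a photo app.
# Pixels are (r, g, b) tuples of ints in 0..255. All names are illustrative.


def apply_grayscale(pixels):
    """Return a new list where each pixel is the integer average of its channels."""
    result = []
    for r, g, b in pixels:
        level = (r + g + b) // 3
        result.append((level, level, level))
    return result


# These tests are the part you would ask the model to write first.
# Once they pass, keep them as a regression suite for later changes.
def test_gray_pixels_are_unchanged():
    assert apply_grayscale([(10, 10, 10)]) == [(10, 10, 10)]


def test_channels_are_averaged():
    assert apply_grayscale([(30, 60, 90)]) == [(60, 60, 60)]


def test_empty_image_stays_empty():
    assert apply_grayscale([]) == []


def test_input_is_not_mutated():
    pixels = [(255, 0, 0)]
    apply_grayscale(pixels)
    assert pixels == [(255, 0, 0)]


if __name__ == "__main__":
    for test in (
        test_gray_pixels_are_unchanged,
        test_channels_are_averaged,
        test_empty_image_stays_empty,
        test_input_is_not_mutated,
    ):
        test()
    print("all tests passed")
```

The tests are plain functions with bare `assert` statements, so they run as a script or under a test runner such as pytest. When the agent later "improves" the filter, rerunning them is a cheap check that nothing regressed.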

I’m going to go into a lot more detail on these points in a subsequent post, sharing specific Cursor rules, prompts and MCP servers.

Looking Ahead

We're in the awkward adolescence of AI coding tools.

Vibe coding is the start of a major shift. Today's tools have real practical limitations, so you need a cautious, analytical approach to benefit from them.

But the pace of improvement suggests today's limitations could vanish surprisingly quickly. Already, the valuable skills have shifted from syntax knowledge to specification design - knowing exactly what to ask for and how.

If you haven’t done so already, I suggest you jump in. The line between natural language and programming language is blurring, and programming five years from now won't look much like programming today.

Because vibe coding sucks, until suddenly it doesn't.